In recent years, trust in “go-to” sources of information has been eroding. The very idea that one can open a page on Wikipedia and find neutral, accurate facts is no longer as sure as it once seemed. Scholars note the “erosion of shared standards for what counts as trustworthy information”. Meanwhile, although Wikipedia remains one of the most visited websites in the world, questions about its reliability persist: Wikipedia articles have an estimated 80% accuracy rate, compared with 95–96% for more traditional sources. As distrust grows and usage habits shift, the mission of Wikipedia, once hailed as a revolutionary open-knowledge encyclopedia, is under threat.

The story of Wikipedia started with a wonderful idea: freely available, collaboratively built knowledge for everyone. It promised to democratise access to information, and for many years it delivered. But that promise is undermined when the editing process is manipulated, when key context is removed, or when ideological or political agendas subtly distort what readers come to believe.

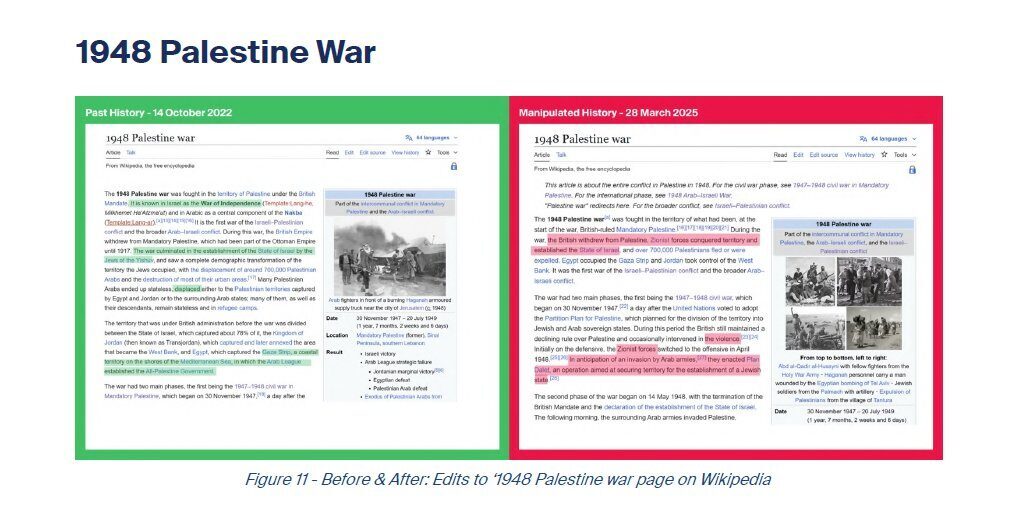

That is why the work of researchers like Dr. Shlomit Aharoni Lir is so vital. In her report, Manipulated History: Past Version vs. Present Subversion, she shows how Wikipedia entries around Israel, Zionism and the conflict have been rewritten over time – how versions that once included important historical context have been replaced by versions that remove or de-emphasise those contexts.

By comparing past and present versions of selected articles, her research reveals how persistent edits can shift public understanding. The open editing model of Wikipedia, while powerful in principle, becomes vulnerable when small groups of actors exploit it to insert bias, remove nuance, or exclude certain voices.

Why does this matter? Because Wikipedia isn’t just another website. Millions of people, including students, educators and journalists, and even artificial-intelligence tools use it as a shortcut for “what everyone knows”. Distortions on Wikipedia therefore ripple outward. They shape textbooks, media references, algorithms and public perceptions.

So when someone like Dr. Aharoni Lir flags that distortion, she isn’t just doing academic work: she is protecting the baseline of public knowledge. Her work helps ensure that when someone opens a Wikipedia page on a sensitive topic, they are getting the fuller story – not a distorted version.